Huawei Unveils Multiple Chips in One Go

Huawei remains committed to monetizing Ascend hardware;

The CANN compiler and virtual instruction set interfaces will be opened up, while all other software will be fully open-sourced. The open-sourcing and opening-up of CANN based on Ascend 910B/C will be completed by December 31, 2025. In the future, open-sourcing, opening-up, and product launches will be synchronized;

The Mind series application enablement suites and toolchains will be fully open-sourced, with completion by December 31, 2025;

The openPangu foundational large model will be fully open-sourced.

"Although the model pioneered by DeepSeek can significantly reduce computing power requirements, to move toward AGI (Artificial General Intelligence) and physical AI, we believe that computing power has been, and will continue to be, the key to artificial intelligence, and even more so for China's artificial intelligence." The foundation of computing power lies in chips, and Ascend chips form the basis of Huawei's AI computing power strategy.

Since the launch of the Ascend 310 chip in 2018 and the Ascend 910 chip in 2019, Huawei's Ascend progress has attracted attention, especially with the large-scale deployment of the Ascend 910C chip alongside the Atlas 900 super node in 2025. For this reason, in his speech today, Xu Zhijun stated firmly: "Ascend chips will continue to evolve, laying a solid foundation for AI computing power in China and beyond."

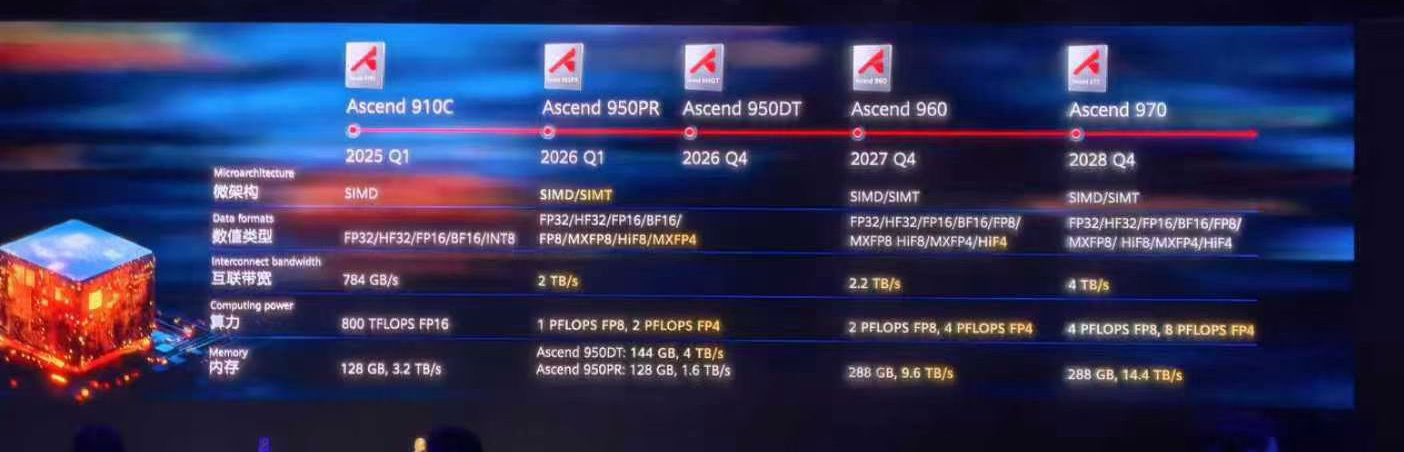

Reportedly, Huawei has planned three chip series for the next three years: the Ascend 950 series, the Ascend 960 series, and the Ascend 970 series. The Ascend 950 series includes two chips, the Ascend 950PR and the Ascend 950DT; further chips are still in the planning stage. The four Ascend chips that are about to launch or are being planned are introduced below.

Among them, the Ascend 950 series is currently under development by Huawei and will be launched soon. Compared with the previous generation of Ascend chips, the Ascend 950 brings fundamental improvements in the following aspects:

It adds support for industry-standard low-precision data formats such as FP8, MXFP8, and MXFP4. Its computing power reaches 1 PFLOPS for FP8 and 2 PFLOPS for FP4, improving training efficiency and inference throughput. It also supports Huawei's self-developed HiF8 format, which keeps the efficiency of FP8 while achieving precision very close to that of FP16.

It significantly improves vector computing power in three ways: first, by increasing the proportion of vector computing power; second, by adopting a new isomorphic design that supports both the SIMD and SIMT programming models (SIMD processes large blocks of vectors like an assembly line, while SIMT handles fragmented data flexibly); third, by reducing the memory access granularity from 512 bytes to 128 bytes, which allows finer-grained memory access and better supports discrete, non-contiguous access patterns.
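
The effect of the smaller access granularity can be illustrated with a minimal sketch. It assumes a fully scattered access pattern in which every element lands in a different memory transaction; the 16-byte element size is a hypothetical workload parameter, not a figure from Huawei.

```python
def fetch_efficiency(element_bytes: int, granularity_bytes: int) -> float:
    """Fraction of fetched bytes that are useful when each element
    sits in its own memory transaction (fully scattered access)."""
    return element_bytes / granularity_bytes

# Hypothetical workload: gathering scattered 16-byte fragments.
ELEMENT_BYTES = 16

old = fetch_efficiency(ELEMENT_BYTES, 512)  # previous granularity (from the article)
new = fetch_efficiency(ELEMENT_BYTES, 128)  # Ascend 950 granularity (from the article)

print(f"512-byte granularity: {old:.1%} of fetched bytes are useful")
print(f"128-byte granularity: {new:.1%} of fetched bytes are useful")
print(f"bytes fetched per useful byte drop by {new / old:.0f}x")
```

Under this worst-case assumption the gain equals the ratio of the two granularities; real workloads with partially contiguous access would see less.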

Its interconnection bandwidth has increased by 2.5 times compared with the Ascend 910C, reaching 2 TB/s.

It addresses the differing requirements for computing power, memory capacity, and memory access bandwidth across the stages of inference, as well as in recommendation and training.

"We have independently developed two types of HBM, namely HiBL 1.0 and HiZQ 2.0. Each is co-packaged with the Ascend 950 die to form one of two chips: the Ascend 950PR, which is designed for prefill and recommendation scenarios, and the Ascend 950DT, which targets decode and training scenarios," Xu Zhijun stated.

It is reported that the first chip in the 950 series is the Ascend 950PR, which is mainly targeted at the inference Prefill phase and recommendation service scenarios.

Discussing the positioning of this chip, Xu Zhijun explained that with the rapid development of agents, input contexts keep growing longer, so the phase that produces the first output token consumes more and more computing resources. In addition, in business applications such as e-commerce, content platforms, and social media, recommendation algorithms are required to deliver higher accuracy and lower latency, which drives growing demand for computing power. Both the inference Prefill phase and recommendation algorithms are compute-intensive: they require high parallel computing capability but relatively low memory access bandwidth. With a hierarchical memory solution, their demand for local memory capacity is also relatively low. The Ascend 950PR adopts HiBL 1.0, Huawei's self-developed low-cost HBM. Compared with high-performance, high-priced HBM3e/4e, it can significantly reduce the investment needed for the inference Prefill phase and recommendation services.

It is revealed that this chip will be launched in the first quarter of 2026, and the first supported product forms will be standard cards and super node servers.

As for the Ascend 950DT, compared with the Ascend 950PR, it focuses more on the inference Decode phase and training scenarios. Since the inference Decode phase and training place high demands on interconnection bandwidth and memory access bandwidth, Huawei has developed HiZQ 2.0, which raises memory capacity to 144 GB and memory access bandwidth to 4 TB/s. At the same time, the interconnection bandwidth has been increased to 2 TB/s. It also supports the FP8, MXFP8, MXFP4, and HiF8 data formats.
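
Why Prefill is described as compute-intensive while Decode depends on memory bandwidth can be sketched with a simple roofline-style estimate. The peak FP8 rate and the 4 TB/s bandwidth come from the article; the intensity model (each 1-byte FP8 weight read once and used for one multiply-add per token, activation traffic ignored) is a simplifying assumption, and the function names are hypothetical.

```python
PEAK_FP8_FLOPS = 1e15   # 1 PFLOPS FP8 for the Ascend 950 series (from the article)
MEM_BANDWIDTH = 4e12    # 4 TB/s for the Ascend 950DT (from the article)

def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Arithmetic intensity (FLOPs per byte) above which a kernel is compute-bound."""
    return peak_flops / bandwidth

def matmul_intensity(tokens: int, bytes_per_weight: int = 1) -> float:
    """Assumed model: every weight is read once and contributes one
    multiply-add (2 FLOPs) per token processed in the same pass."""
    return 2 * tokens / bytes_per_weight

def bound(tokens: int) -> str:
    ridge = ridge_point(PEAK_FP8_FLOPS, MEM_BANDWIDTH)
    return "compute-bound" if matmul_intensity(tokens) >= ridge else "memory-bound"

print("ridge point (FLOPs/byte):", ridge_point(PEAK_FP8_FLOPS, MEM_BANDWIDTH))
print("decode, 1 token per step:", bound(1))
print("prefill, 4096-token prompt:", bound(4096))
```

In this simplified model a single-token decode step sits far below the ridge point, which is consistent with pairing the Decode-oriented chip with the higher-bandwidth HBM.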

The Ascend 950DT will be launched in Q4 2026.

The third chip is the planned Ascend 960. According to reports, this chip will double specifications such as computing power, memory access bandwidth, memory capacity, and number of interconnection ports compared with the Ascend 950, significantly enhancing performance in scenarios like training and inference. It will also support Huawei's self-developed HiF4 data format, described as the industry's leading 4-bit precision implementation, which can further boost inference throughput while delivering better inference accuracy than industry FP4 solutions. The Ascend 960 is scheduled for release in the fourth quarter of 2027.

The last chip, also in planning, is the Ascend 970. Its specifications are still under discussion, but the overall direction is to achieve substantial upgrades across all indicators and comprehensively improve training and inference performance. "Our initial consideration at present is that compared with the Ascend 960, the Ascend 970 will double its FP4 computing power, FP8 computing power, and interconnection bandwidth across the board, and increase memory access bandwidth by at least 1.5 times. The Ascend 970 is planned to be launched in the fourth quarter of 2028. Everyone can look forward to its remarkable performance then," Xu Zhijun stated.

Xu Zhijun summarized that regarding Ascend chips, Huawei will continue to evolve at a pace of nearly doubling computing power with each new generation, while focusing on directions such as greater ease of use, more data formats, and higher bandwidth, continuously meeting the growing demand for AI computing power.

Compared with the Ascend 910B/910C, the key changes starting from the Ascend 950 include:

Introduction of the new SIMD/SIMT isomorphism to improve programming ease of use;

Support for a richer range of data formats, including FP32, HF32, FP16, BF16, FP8, MXFP8, HiF8, MXFP4, and HiF4;

Support for higher interconnection bandwidth (2 TB/s for the 950 series and 4 TB/s for the 970 series);

Support for greater computing power (FP8 computing power increases from 1 PFLOPS in the 950 series to 2 PFLOPS in the 960 and 4 PFLOPS in the 970; FP4 computing power rises from 2 PFLOPS in the 950 to 4 PFLOPS in the 960 and 8 PFLOPS in the 970);

Gradual doubling of memory capacity and a fourfold increase in memory access bandwidth.
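
The per-generation figures listed above can be tabulated to check the "doubling with each generation" pattern. This is only a restatement of the article's numbers; the dictionary layout and function name are illustrative.

```python
# Peak compute per chip in PFLOPS, as quoted in the article.
ROADMAP = {
    "Ascend 950": {"fp8": 1, "fp4": 2},
    "Ascend 960": {"fp8": 2, "fp4": 4},
    "Ascend 970": {"fp8": 4, "fp4": 8},
}

def generation_ratios(metric: str) -> dict:
    """Ratio of each generation's figure to the previous generation's."""
    chips = list(ROADMAP)
    return {
        f"{prev} -> {nxt}": ROADMAP[nxt][metric] / ROADMAP[prev][metric]
        for prev, nxt in zip(chips, chips[1:])
    }

for metric in ("fp8", "fp4"):
    print(metric, generation_ratios(metric))
```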

As Xu Zhijun stated, with Ascend chips as the foundation, Huawei is able to create computing power solutions that meet customer needs. From the technical perspective of large-scale AI computing infrastructure construction, super nodes have become the dominant product form and are evolving into the new normal for AI infrastructure development.

A super node is essentially a computer capable of learning, thinking, and reasoning; physically, it consists of multiple machines, but logically, it performs learning, thinking, and reasoning as a single unit. As demand for computing power continues to grow, the scale of super nodes is also expanding continuously and rapidly.

In March this year, Huawei officially launched the Atlas 900 Super Node, which supports a full configuration of 384 cards. As a super node, these 384 Ascend 910C chips can operate like a single computer, with a maximum computing power of 300 PFLOPS. To date, the Atlas 900 remains the super node with the highest computing power globally. The frequently mentioned CloudMatrix 384 Super Node is a cloud service instance built by Huawei Cloud on the Atlas 900 Super Node. Since its launch, more than 300 Atlas 900 Super Nodes have been deployed, serving more than 20 customers across industries such as internet, telecommunications, and manufacturing. It can be said that the Atlas 900 marked the start of Huawei's AI super node journey in 2025.

Now, by leveraging Ascend chips that have been launched or are under development, Huawei will bring more super node and cluster products to everyone. We now enter the most exciting moment of today: the release of new products. Among them, the Atlas 950 Super Node, built on the Ascend 950DT, is the first product launched today.

According to reports, the Atlas 950 Super Node supports 8,192 Ascend cards based on the Ascend 950DT, more than 20 times the capacity of the Atlas 900 Super Node. Each "Ascend card" corresponds to one Ascend 950DT chip, so 8,192 Ascend cards are equivalent to 8,192 Ascend 950DT chips.

A fully configured Atlas 950 Super Node consists of 160 cabinets in total, including 128 computing cabinets and 32 interconnection cabinets, covering an area of approximately 1,000 square meters. All-optical interconnection is adopted between cabinets. Its total computing power has been significantly enhanced: FP8 computing power reaches 8 EFLOPS, and FP4 computing power reaches 16 EFLOPS. The interconnection bandwidth reaches 16 PB/s, which means the total interconnection bandwidth of a single Atlas 950 product exceeds the peak bandwidth of the global internet today by more than 10 times.
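
The aggregate figures for the Atlas 950 Super Node can be roughly reproduced from the per-chip figures quoted earlier in the article. The sketch below assumes the totals are simple sums over all 8,192 cards and uses decimal units (1 EFLOPS = 1,000 PFLOPS, 1 PB = 1,000 TB); variable names are illustrative.

```python
CARDS = 8192                 # Ascend 950DT cards per super node
COMPUTING_CABINETS = 128
FP8_PFLOPS_PER_CARD = 1      # Ascend 950 series FP8 figure
FP4_PFLOPS_PER_CARD = 2      # Ascend 950 series FP4 figure
LINK_TBPS_PER_CARD = 2       # interconnection bandwidth per chip, TB/s

cards_per_cabinet = CARDS // COMPUTING_CABINETS
fp8_total_eflops = CARDS * FP8_PFLOPS_PER_CARD / 1000
fp4_total_eflops = CARDS * FP4_PFLOPS_PER_CARD / 1000
interconnect_pbps = CARDS * LINK_TBPS_PER_CARD / 1000

print(f"cards per computing cabinet: {cards_per_cabinet}")
print(f"FP8 total: {fp8_total_eflops:.2f} EFLOPS")
print(f"FP4 total: {fp4_total_eflops:.2f} EFLOPS")
print(f"interconnect total: {interconnect_pbps:.2f} PB/s")
```

The results land close to the rounded 8 EFLOPS, 16 EFLOPS, and 16 PB/s figures in the text.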

At the Huawei Connect 2025 conference, Xu Zhijun, the rotating chairman of Huawei, stated that the Atlas 950 SuperPoD will be launched in the fourth quarter of 2026.

Xu Zhijun said, "We are proud to see that the Atlas 950 SuperPoD will remain the world's most powerful supernode for at least the next several years, and it far exceeds the main products in the industry in all major capabilities. Compared with NVIDIA's NVL144, which will also be launched in the second half of next year, the card scale of the Atlas 950 SuperPoD is 56.8 times that of the NVL144, the total computing power is 6.7 times that of the NVL144, the memory capacity is 15 times that of the NVL144, reaching 1,152 TB, and the interconnection bandwidth is 62 times that of the NVL144, reaching 16.3 PB/s. Even compared with the NVL576 that NVIDIA plans to launch in 2027, the Atlas 950 SuperPoD is still leading in all aspects."
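
The quoted ratios can be sanity-checked with simple arithmetic. In the sketch below, the NVL144 card count of 144 is an assumption taken from the product name, the memory check assumes 1 TB = 1,024 GB, and the "implied" NVL144 values are merely what the quoted ratios work out to, not vendor specifications.

```python
ATLAS_950_CARDS = 8192
NVL144_CARDS = 144               # assumption: card count taken from the name
ATLAS_950_MEMORY_TB = 1152
ATLAS_950_BANDWIDTH_PBPS = 16.3

card_ratio = ATLAS_950_CARDS / NVL144_CARDS
# With 1 TB = 1,024 GB, the total matches 144 GB per Ascend 950DT.
memory_per_card_gb = ATLAS_950_MEMORY_TB * 1024 / ATLAS_950_CARDS

# Values implied by the quoted 15x and 62x ratios (derived, not official).
implied_nvl144_memory_tb = ATLAS_950_MEMORY_TB / 15
implied_nvl144_bandwidth_pbps = ATLAS_950_BANDWIDTH_PBPS / 62

print(f"card ratio: {card_ratio:.2f}")
print(f"memory per Atlas 950 card: {memory_per_card_gb:.0f} GB")
print(f"implied NVL144 memory: {implied_nvl144_memory_tb:.1f} TB")
print(f"implied NVL144 interconnect: {implied_nvl144_bandwidth_pbps:.3f} PB/s")
```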

He also pointed out that the gains in computing power, memory capacity, memory access speed, interconnection bandwidth, and other capabilities of this node bring a significant improvement in the training performance and inference throughput of large models. Compared with the previously launched Atlas 900 SuperPoD, the training performance of the Atlas 950 SuperPoD has increased by 17 times, reaching 4.91M TPS. By supporting the FP4 data format, the inference performance of the Atlas 950 SuperPoD has increased by 26.5 times, reaching 19.6M TPS.

Xu Zhijun emphasized that the 8,192-card supernode is not the end point for the company, and Huawei is continuing to push further. The Atlas 960 SuperPoD, the second supernode product launched today, is one result of that work. It is reported that this node is built on the Ascend 960 and can support a maximum of 15,488 cards. The Atlas 960 SuperPoD consists of 176 computing cabinets and 44 interconnection cabinets, a total of 220 cabinets, covering an area of about 2,200 square meters.

The Atlas 960 Super Node is scheduled for launch in the fourth quarter of 2027.

With another upgrade in card scale, the Atlas 960 Super Node further strengthens our advantages in AI super nodes. Built on the Ascend 960, its total computing power, memory capacity, and interconnection bandwidth are doubled compared to the Atlas 950. Specifically, its total FP8 computing power will reach 30 EFLOPS, while total FP4 computing power will hit 60 EFLOPS; memory capacity will reach 4,460 TB, and interconnection bandwidth will reach 34 PB/s. Compared with the Atlas 950 Super Node, the large-model training and inference performance of the Atlas 960 will increase by more than 3 times and 4 times respectively, reaching 15.9M TPS and 80.5M TPS.
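
The Atlas 960 totals can be cross-checked against the per-chip Ascend 960 figures and the cabinet counts given above. The sketch assumes totals are sums over all 15,488 cards and uses decimal units; it is a consistency check on the article's numbers, not an official breakdown.

```python
CARDS = 15488
COMPUTING_CABINETS = 176
FP8_PFLOPS_PER_CARD = 2      # Ascend 960 FP8 figure
FP4_PFLOPS_PER_CARD = 4      # Ascend 960 FP4 figure
TOTAL_MEMORY_TB = 4460

cards_per_cabinet = CARDS // COMPUTING_CABINETS
fp8_total_eflops = CARDS * FP8_PFLOPS_PER_CARD / 1000
fp4_total_eflops = CARDS * FP4_PFLOPS_PER_CARD / 1000
memory_per_card_gb = TOTAL_MEMORY_TB * 1000 / CARDS

# Throughput figures (million TPS) quoted for Atlas 950 and Atlas 960.
training_speedup = 15.9 / 4.91
inference_speedup = 80.5 / 19.6

print(f"cards per computing cabinet: {cards_per_cabinet}")
print(f"FP8 total: {fp8_total_eflops:.1f} EFLOPS, FP4 total: {fp4_total_eflops:.1f} EFLOPS")
print(f"memory per card: {memory_per_card_gb:.0f} GB")
print(f"training speedup: {training_speedup:.2f}x, inference speedup: {inference_speedup:.2f}x")
```

The per-card memory works out to about 288 GB, twice the 144 GB of the Ascend 950DT, and the throughput ratios match the stated "more than 3 times and 4 times".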

"Through the Atlas 950 and Atlas 960, we are fully confident in providing sustainable and sufficient computing power for the long-term and rapid development of artificial intelligence," Xu Zhijun said.

Super nodes have redefined the paradigm of AI infrastructure, but their application is not limited to AI. In the field of general computing, Huawei also believes that super node technology can bring great value. From the demand perspective, some core financial businesses still run on mainframes and minicomputers. Compared with ordinary server clusters, these businesses have higher requirements for server performance and reliability—needs that general computing super nodes fully meet. From the technical perspective, super nodes can also inject new vitality into the general computing field.

Therefore, Kunpeng processors will continue to evolve around supporting super nodes, with more cores and higher performance as key directions. At the same time, through the self-developed dual-thread Lingxi Core, Kunpeng processors can easily support more threads.

In Q1 2026, Huawei will launch the Kunpeng 950 processor, available in two versions: 96 cores/192 threads and 192 cores/384 threads. It supports general computing super nodes and adds four-layer isolation for security, making it the first Kunpeng data center processor to enable confidential computing.

In Q1 2028, Kunpeng processors will continue to make breakthroughs in key technologies such as chip microarchitecture and advanced packaging, and two new versions will be launched. One is the high-performance version with 96 cores/192 threads, featuring a single-core performance improvement of over 50%, mainly targeting scenarios such as AI hosts and databases. The other is the high-density version with no fewer than 256 cores/512 threads, primarily designed for scenarios like virtualization, containers, big data, and data warehouses.

At the conference, Xu Zhijun also launched the third product—the TaiShan 950 Super Node built on the Kunpeng 950. As the world’s first general computing super node, the TaiShan 950 supports a maximum of 16 nodes and 32 processors, with a maximum memory of 48 TB. It also supports pooling of memory, SSD, and DPU.
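
The TaiShan 950 maximum configuration breaks down as follows. The sketch assumes an even split of processors and memory across nodes and, for the core and thread counts, that every socket holds the 192-core/384-thread Kunpeng 950 variant; both are assumptions for illustration.

```python
NODES = 16
PROCESSORS = 32
MAX_MEMORY_TB = 48
CORES_PER_PROCESSOR = 192     # larger Kunpeng 950 variant
THREADS_PER_PROCESSOR = 384

processors_per_node = PROCESSORS // NODES
memory_per_node_tb = MAX_MEMORY_TB / NODES
max_cores = PROCESSORS * CORES_PER_PROCESSOR
max_threads = PROCESSORS * THREADS_PER_PROCESSOR

print(f"{processors_per_node} processors and {memory_per_node_tb:.0f} TB per node")
print(f"up to {max_cores} cores / {max_threads} threads per super node")
```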

According to reports, this product is more than a technological upgrade in the field of general computing. In addition to significantly improving business performance in general computing scenarios, it can also help financial systems solve core challenges. Currently, the key difficulty in replacing mainframes and minicomputers lies in the distributed transformation of databases. The GaussDB multi-write architecture built on the TaiShan 950 Super Node, however, requires no transformation while achieving a 2.9x performance improvement, so it can smoothly replace traditional databases running on mainframes and minicomputers. The combination of TaiShan 950 and distributed GaussDB will become the "terminator" for various mainframes and minicomputers, completely replacing mainframes, minicomputers, and Oracle Exadata database servers across all application scenarios.

Beyond core database scenarios, the TaiShan 950 Super Node also delivers outstanding performance in a wider range of scenarios: for example, it increases memory utilization by 20% in virtualized environments, and shortens real-time data processing time by 30% in Spark big data scenarios.

The TaiShan 950 Super Node is scheduled for launch in Q1 2026; stay tuned.

"The value of super nodes is not limited to traditional scenarios of intelligent computing and general computing. Recommendation systems widely used in the internet industry are evolving from traditional recommendation algorithms to generative recommendation systems. We can build hybrid super nodes based on the TaiShan 950 and Atlas 950, opening up a new architectural direction for the next-generation generative recommendation systems," Xu Zhijun said.

On one hand, with ultra-high bandwidth, ultra-low latency interconnection, and ultra-large memory, hybrid super nodes form a super-large shared memory pool that supports PB-level recommendation system embedding tables, thereby enabling ultra-high-dimensional user features. On the other hand, the super-large AI computing power of hybrid super nodes can support ultra-low latency inference and feature retrieval. Therefore, hybrid super nodes represent a new option for solutions targeting next-generation generative recommendation systems.
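
To give a sense of what a "PB-level" embedding table means, here is a minimal sizing sketch. The row count, embedding dimension, and 4-byte value size are hypothetical; only the 144 GB per-card capacity comes from the article, and the card count assumes, unrealistically, that all card memory is available to the table.

```python
def table_bytes(rows: int, dim: int, bytes_per_value: int) -> int:
    """Size of a dense embedding table in bytes."""
    return rows * dim * bytes_per_value

# Hypothetical table: one trillion IDs, 256-dimensional FP32 embeddings.
ROWS = 10**12
DIM = 256
BYTES_PER_VALUE = 4

CARD_MEMORY_BYTES = 144 * 10**9   # 144 GB per Ascend 950DT (from the article)

size = table_bytes(ROWS, DIM, BYTES_PER_VALUE)
cards_needed = -(-size // CARD_MEMORY_BYTES)   # ceiling division

print(f"table size: {size / 1e15:.3f} PB")
print(f"cards needed to hold it in pooled memory: {cards_needed}")
```

A table of this size cannot fit on any single server, which is why the text emphasizes a shared memory pool spanning the whole super node.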

Large-scale super nodes have pushed the capabilities of both intelligent computing and general computing to new heights, while also posing significant challenges to interconnection technology. As a leader in the connectivity field, Huawei is not afraid of these challenges. Xu Zhijun stated that when defining and designing the technical specifications of the two super nodes, Atlas 950 and Atlas 960, Huawei encountered enormous challenges in interconnection technology, mainly in two aspects:

How to achieve long-distance and high-reliability interconnection. Large-scale super nodes have a large number of cabinets, and the inter-cabinet connection distance is long. Neither current electrical nor current optical interconnection technology meets the requirements. Electrical interconnection has a short reach at high speeds and can connect at most two cabinets. Optical interconnection can connect multiple cabinets over long distances, but it fails to meet reliability requirements.

How to achieve high bandwidth and low latency. Current inter-cabinet, card-to-card interconnection bandwidth is low, falling short of what super nodes need by a factor of 5. Inter-cabinet, card-to-card latency is high; the best current interconnection technology can only achieve about 3 microseconds, which still has a 24% gap from the design requirements of the Atlas 950/960. When latency is as low as 2-3 microseconds, it is already approaching physical limits, and even a 0.1-microsecond improvement poses a huge challenge.

Leveraging its technical capabilities built over more than 30 years, Huawei has completely solved the problems of current technologies through systematic innovation, exceeding the design requirements of the Atlas 950/960 Super Nodes and making 10,000-card super nodes a reality.

First, to address the issue of long-distance and high-reliability interconnection, Huawei has introduced high-reliability mechanisms at every layer of the interconnection protocol, including the physical layer, data link layer, network layer, and transport layer. At the same time, it has integrated nanosecond-level fault detection and protection switching into the optical path, so that applications remain unaffected when optical modules flicker or fail. Additionally, Huawei has redefined and redesigned optical devices, optical modules, and interconnection chips. These innovations and designs have increased the reliability of optical interconnection by 100 times, extended the interconnection distance to over 200 meters, and combined the reliability of electrical interconnection with the long-distance capability of optical interconnection.

Second, to solve the problem of high bandwidth and low latency, Huawei has made breakthroughs in multi-port aggregation, high-density packaging technology, and a peer-to-peer (equal) architecture with unified protocols, enabling terabyte-level bandwidth and ultra-low latency of 2.1 microseconds. It is through this series of systematic and original technological innovations that Huawei has mastered super node interconnection technology, met the interconnection requirements of high reliability, all-optical interconnection, high bandwidth, and low latency, and made large-scale super nodes possible.
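
The remark that latencies of 2-3 microseconds approach physical limits can be made concrete by estimating pure propagation delay over the 200-meter optical reach mentioned above. The refractive index of 1.47 is a typical value for silica fiber and is an assumption here; the sketch does not claim that the 2.1-microsecond figure was measured at 200 meters.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458   # in vacuum
FIBER_REFRACTIVE_INDEX = 1.47          # assumed typical value for silica fiber

def propagation_delay_us(distance_m: float) -> float:
    """One-way propagation delay through optical fiber, in microseconds."""
    speed_in_fiber = SPEED_OF_LIGHT_M_PER_S / FIBER_REFRACTIVE_INDEX
    return distance_m / speed_in_fiber * 1e6

delay_200m = propagation_delay_us(200)
print(f"one-way delay over 200 m of fiber: {delay_200m:.2f} microseconds")
```

At that distance, propagation alone accounts for roughly one microsecond, leaving little headroom for switching and protocol processing within a budget of about two microseconds.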

To meet the interconnection requirements of the Atlas 950/960 Super Nodes and ensure that a 10,000-card super node can function as a single computer, Huawei has pioneered a super node architecture and a new type of interconnection protocol that supports it. The core value proposition of the super node architecture based on this interconnection protocol is that a 10,000-card super node acts as one computer. In other words, through this interconnection protocol, tens of thousands of computing cards are connected into a super node that can work, learn, think, and reason like a single computer.

"A 10,000-card super node architecture should have six key features: bus-level interconnection, equal collaboration, full-scale pooling, protocol unification, large-scale networking, and high availability," summarized Xu Zhijun. Guided by this thinking, Huawei has launched an interconnection protocol for super nodes called "Lingqu" (English name: UB, short for UnifiedBus).

The term "Lingqu" evokes the concept of a "thoroughfare connecting nine provinces," symbolizing the connection of large-scale computing power. The Atlas 900 Super Node based on Lingqu 1.0 has been shipping since March 2025, with over 300 commercial deployments to date, fully validating the Lingqu 1.0 technology. Building on Lingqu 1.0, Huawei has further enriched functions, optimized performance, expanded scale, and improved the protocol to develop Lingqu 2.0. The Atlas 950 Super Node introduced above is based on Lingqu 2.0.

Xu Zhijun stated that Huawei will open up Lingqu 2.0 to further promote the development of interconnection technology and industrial progress on a broader scale. Huawei welcomes industry partners to develop related products and components based on Lingqu and jointly build an open Lingqu ecosystem.

"Based on the chip manufacturing processes available in China, we strive to build a 'super node + cluster' computing power solution to continuously meet computing power demands. Today, we have introduced three super node products. Lingqu was developed as an interconnection protocol for super nodes, but it is also the optimal interconnection technology for building computing power cluster products," Xu Zhijun said.

In terms of cluster products, Huawei also brought two products today. The first is the Atlas 950 SuperCluster, a 500,000-card cluster.

According to reports, the Atlas 950 SuperCluster is composed of 64 interconnected Atlas 950 Super Nodes, integrating over 520,000 Ascend 950DT chips from more than 10,000 cabinets into a single unit, with a total FP8 computing power of up to 524 EFLOPS. Its launch time will be synchronized with that of the Atlas 950 Super Node, i.e., Q4 2026.
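
The cluster totals follow directly from the super node figures given earlier in the article. The sketch below assumes every one of the 64 super nodes is fully configured (8,192 cards, 160 cabinets) and that FP8 totals are simple sums at 1 PFLOPS per card.

```python
SUPER_NODES = 64
CARDS_PER_SUPER_NODE = 8192
CABINETS_PER_SUPER_NODE = 160
FP8_PFLOPS_PER_CARD = 1

total_cards = SUPER_NODES * CARDS_PER_SUPER_NODE
total_cabinets = SUPER_NODES * CABINETS_PER_SUPER_NODE
total_fp8_eflops = total_cards * FP8_PFLOPS_PER_CARD / 1000

print(f"cards: {total_cards:,}")
print(f"cabinets: {total_cabinets:,}")
print(f"FP8 total: {total_fp8_eflops:.0f} EFLOPS")
```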

In terms of cluster networking, this cluster supports both UBoE and RoCE protocols. UBoE (UnifiedBus over Ethernet) carries the UB protocol over Ethernet, allowing customers to utilize their existing Ethernet switches. Compared with traditional RoCE, UBoE networking features lower static latency, higher reliability, and reduced numbers of switches and optical modules. Therefore, we recommend UBoE.

Compared with the current world's largest cluster, xAI Colossus, Huawei's Atlas 950 SuperCluster has a scale 2.5 times larger and computing power 1.3 times higher, making it undoubtedly the world's most powerful computing power cluster! Whether for current mainstream training tasks of 100-billion-parameter dense or sparse large models, or for future training of trillion-parameter or 10-trillion-parameter large models, the super node cluster can serve as a high-performance computing power base, supporting the continuous innovation of artificial intelligence efficiently and stably.

At the Huawei Connect 2025 conference, Xu Zhijun, the vice chairman and rotating chairman of Huawei, announced that the million-card Atlas 960 SuperCluster, built on the Atlas 960 super node, will be launched alongside it in the fourth quarter of 2027. The total FP8 computing power of this cluster will reach 2 ZFLOPS, and the total FP4 computing power will reach 4 ZFLOPS. It supports both the UBoE and RoCE protocols. With UBoE, performance and reliability are better, and the advantages in static latency and network failure-free time are further extended, so UBoE networking is still recommended.
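
The "million-card" description can be checked against the quoted 2 ZFLOPS FP8 total and the 2 PFLOPS per-chip figure for the Ascend 960. The number of super nodes is not stated in the article; the value computed here is only what the other figures imply.

```python
TOTAL_FP8_FLOPS = 2 * 10**21        # 2 ZFLOPS
FP8_FLOPS_PER_CARD = 2 * 10**15     # 2 PFLOPS per Ascend 960
CARDS_PER_SUPER_NODE = 15488        # Atlas 960 maximum configuration

implied_cards = TOTAL_FP8_FLOPS // FP8_FLOPS_PER_CARD
implied_super_nodes = implied_cards / CARDS_PER_SUPER_NODE

print(f"implied cards: {implied_cards:,}")
print(f"implied super nodes: {implied_super_nodes:.1f}")
```

The implied count of roughly 64 to 65 super nodes is in line with the 64-node layout described for the Atlas 950 SuperCluster.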

Through the Atlas 960 SuperCluster, Huawei will continue to accelerate customer application innovation and explore new heights of intelligence. Xu Zhijun hopes to work with the industry to lead a new era of AI infrastructure with the pioneering Lingqu super node interconnection technology, and to keep meeting the growing demand for computing power with Lingqu-based super nodes and clusters, creating greater value for the continued development of artificial intelligence.